How Does AI Detection Work and How Can You Bypass It?

The Concept of AI Detection

AI detection refers to the use of artificial intelligence techniques and algorithms to identify content created by artificial intelligence systems. This technology is vital for cybersecurity, content moderation, and intellectual property protection.

Why is it so important? Because it ensures authenticity and reliability.

Advanced algorithms power AI detectors, analyzing patterns and behaviors, and looking for attributes that indicate AI-generated content. Comparing these against vast databases of known AI characteristics, they ensure no digital stone is left unturned in the quest for authenticity.

This process helps maintain the integrity of digital platforms.

It fights misinformation. It battles fraud. It prevents unauthorized AI usage.

Essentially, AI detection acts as a guardian and preserves trust in our digital world.

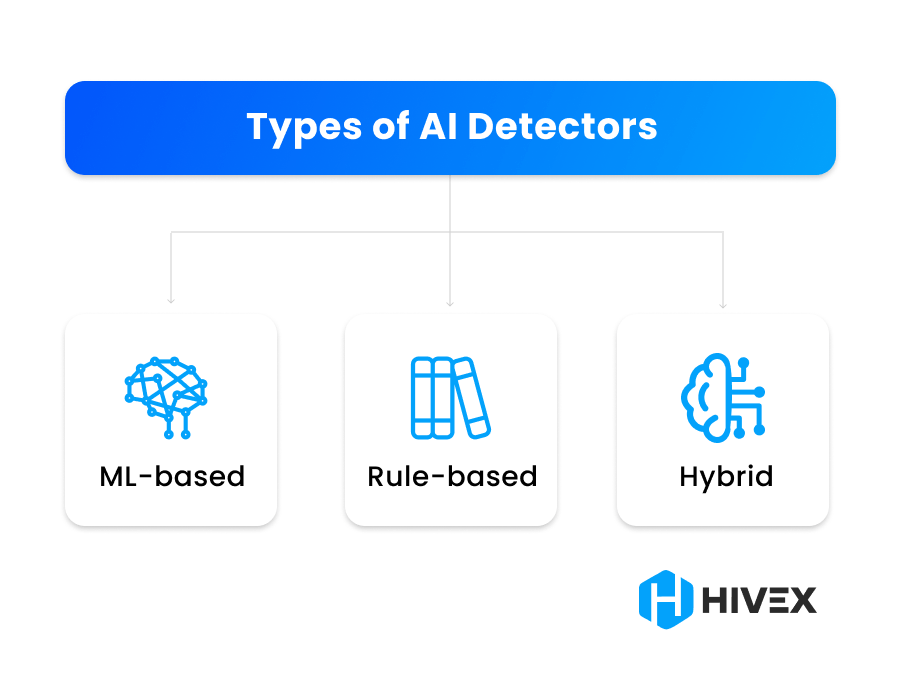

Types of AI Detectors

There are several types of AI detectors, each employing different methodologies to achieve accurate detection.

Machine learning-based detectors utilize vast datasets and complex algorithms to recognize patterns and anomalies indicative of AI-generated content.

Rule-based detectors, on the other hand, rely on predefined rules and heuristics to flag suspicious content.

Hybrid detectors combine both machine learning and rule-based approaches, leveraging the strengths of each to enhance detection accuracy and reduce false positives and negatives.

1. Machine learning-based detectors

Machine learning-based detectors are sophisticated systems that use advanced algorithms to analyze data, identify patterns, and make predictions about whether content is written by a human or artificial intelligence.

These systems continuously learn and adapt to new data, enhancing their accuracy and reliability over time.

The ability of machine learning detectors to handle complex and nuanced tasks makes them especially valuable.

Supervised learning algorithms, for instance, can be trained on labeled datasets, which contain examples of both human and AI-generated content. This training allows the model to learn the distinguishing features of each category, making accurate classifications possible.

On the other hand, unsupervised learning can identify hidden patterns in data without explicit labels, which is particularly useful for detecting novel types of AI-generated content that may not fit established categories.
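As a rough illustration of the supervised case, here is a minimal sketch of a word-count Naive Bayes classifier trained on labeled examples. The four training sentences and both labels are made up for illustration, and Naive Bayes is only one of many possible algorithms; real detectors train on far larger datasets and richer features.

```python
import math
from collections import Counter

# Hypothetical labeled examples, invented for illustration only.
TRAINING = [
    ("honestly i kinda forgot what i was saying lol", "human"),
    ("my cat knocked the mug off the desk again", "human"),
    ("furthermore it is important to note the following", "ai"),
    ("in conclusion this comprehensive overview highlights key aspects", "ai"),
]


def train_naive_bayes(samples: list[tuple[str, str]]) -> dict:
    """Count word frequencies per label from (text, label) pairs."""
    word_counts: dict[str, Counter] = {}
    doc_counts: Counter = Counter()
    for text, label in samples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return {"words": word_counts, "docs": doc_counts, "vocab": vocab}


def predict(model: dict, text: str) -> str:
    """Return the label with the highest log-probability for the text."""
    total_docs = sum(model["docs"].values())
    vocab_size = len(model["vocab"])
    best_label, best_score = None, -math.inf
    for label, counts in model["words"].items():
        score = math.log(model["docs"][label] / total_docs)
        total_words = sum(counts.values())
        for word in text.lower().split():
            # Laplace smoothing so unseen words do not zero out the score.
            score += math.log((counts[word] + 1) / (total_words + vocab_size))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train_naive_bayes(TRAINING)
```

Calling `predict(model, "it is important to note this overview")` picks the label whose training vocabulary best matches the input. With such a tiny dataset the result says nothing about real-world accuracy; it only shows the train-then-classify flow.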

But they face challenges:

- they need large datasets

- they risk overfitting

- they require regular updates

AI techniques continue to advance, and detectors must keep pace. This makes them extremely important yet demanding.

2. Rule-based detectors

Rule-based detectors are a fundamental approach to AI detection systems, relying on predefined rules and patterns to identify AI-generated content.

These systems use a series of if-then statements to analyze and classify content based on specific traits.

For instance, a rule-based detector might flag text that frequently uses certain keywords. It might also detect a lack of human-like variability or syntactic structures commonly found in machine-generated text. This method works well when AI-generated content is predictable and consistent.
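The if-then logic described above can be sketched in a few lines. This is a minimal example under stated assumptions: the phrase list, the threshold of two phrase hits, and the variability cutoff of 1.0 are all hypothetical values chosen for illustration, not rules from any real detector.

```python
import re
import statistics

# Hypothetical stock phrases; a real rule set would be curated by experts.
FLAGGED_PHRASES = ("in conclusion", "it is important to note", "delve into")


def sentence_lengths(text: str) -> list[int]:
    """Return the number of words in each sentence of the text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def rule_based_flags(text: str) -> list[str]:
    """Apply simple if-then rules and return the names of the rules that fired."""
    flags = []
    lowered = text.lower()

    # Rule 1: the text uses two or more of the flagged phrases.
    hits = sum(1 for phrase in FLAGGED_PHRASES if phrase in lowered)
    if hits >= 2:
        flags.append("stock_phrases")

    # Rule 2: sentence lengths barely vary (low human-like variability).
    lengths = sentence_lengths(text)
    if len(lengths) >= 3 and statistics.pstdev(lengths) < 1.0:
        flags.append("uniform_sentence_length")

    return flags
```

Because each rule is an explicit condition, it is easy to see why a text was flagged and to adjust a threshold when it misfires.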

One major advantage of rule-based detectors is their simplicity.

The detection logic is clear: it is based on explicit rules, making it easy to understand, debug, and modify. This adaptability allows quick adjustments to new types of content or changing requirements.

However, simplicity can be a double-edged sword. These detectors may falter with sophisticated AI-generated content. Such content often does not follow easily identifiable patterns, leading to higher false positive or negative rates.

Despite these challenges, rule-based detectors remain vital. They are essential in many AI detection frameworks. They excel when paired with advanced machine learning methods, forming hybrid systems.

By combining simple rules with machine learning, hybrid systems are more accurate and robust. Using both methodologies, this integrated approach delivers a comprehensive AI detection solution.

3. Hybrid detectors

Hybrid detectors combine the strengths of both machine learning-based and rule-based detection systems to provide a more robust and comprehensive approach to AI content detection.

Machine learning is highly adaptive: it keeps learning as new data arrives. Rule-based systems excel at precision, applying strict criteria consistently.

By leveraging their strengths, hybrid detectors address a wider range of detection challenges and improve overall accuracy. This approach is particularly effective in handling diverse types of AI-generated content, which may vary in complexity and subtlety.

One of the key advantages of hybrid detectors is their ability to balance false positives and false negatives.

Machine learning algorithms can identify patterns and anomalies that may not be explicitly defined in rule-based systems, thus catching novel or evolving AI-generated content.

Yet, rule-based components bring their own strengths. They enforce strict criteria, ensuring precision for specific content types.

This synergy enables hybrid detectors to be more resilient against evasion techniques used by sophisticated AI systems, making them a valuable tool in the ongoing battle against AI-generated misinformation and other forms of malicious content.
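One simple way to combine the two approaches is a weighted blend of a model's probability and the share of rules that fired. The sketch below assumes this weighting scheme; the function names, the 0.7 weight, and the 0.5 threshold are hypothetical, and real hybrid systems may combine signals very differently.

```python
def hybrid_score(ml_probability: float, rules_fired: int, total_rules: int,
                 ml_weight: float = 0.7) -> float:
    """Blend a model probability with the share of rules that fired."""
    rule_score = rules_fired / total_rules if total_rules else 0.0
    return ml_weight * ml_probability + (1.0 - ml_weight) * rule_score


def is_flagged(ml_probability: float, rules_fired: int, total_rules: int,
               threshold: float = 0.5) -> bool:
    """Flag content when the blended score reaches the threshold."""
    return hybrid_score(ml_probability, rules_fired, total_rules) >= threshold
```

With these defaults, a borderline model probability of 0.4 is flagged when both of two rules fire, but not when none do, which shows how the rule component can tip an uncertain decision.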

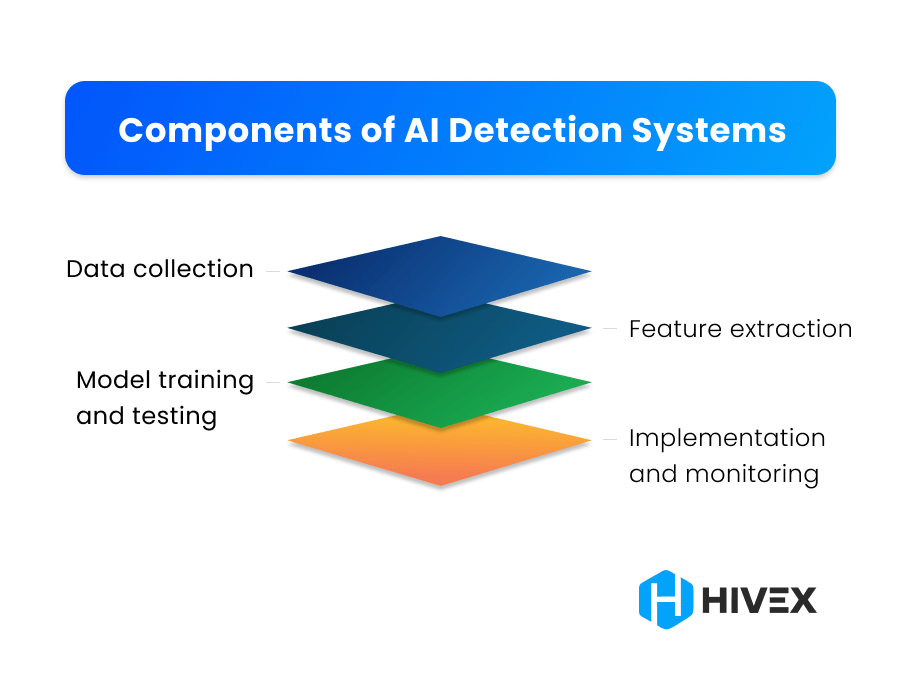

Components of AI Detection Systems

AI detection systems are built from several components that work together to identify and analyze AI-generated content effectively. They include data collection, feature extraction, model training and testing, and implementation and monitoring.

Each component plays an important role in ensuring the accuracy and efficiency of the detection process, allowing the system to adapt and respond to new types of AI-generated content as they emerge. Let’s take a closer look at each of them.

1. Data collection

Data collection is a foundational step in the development of AI detection systems, as the quality and relevance of the data directly impact the effectiveness of the detection models.

In the context of AI content detection, data collection involves gathering a diverse and representative dataset of both AI-generated and human-generated content.

This dataset serves as the training ground for machine learning algorithms, enabling them to learn the distinguishing features and patterns associated with AI-generated content.

To ensure comprehensive data collection, sources may include social media platforms, news websites, academic databases, and content-sharing sites.

Each of these sources can provide valuable examples of AI-generated content, ranging from deepfake videos and synthetic text to AI-generated images and audio.

A balanced dataset is vital. It should encompass various types of AI content and include materials from different languages, cultures, and contexts.

Also, collecting metadata (like timestamps, authorship details, and content interactions) enriches the dataset, providing additional dimensions for analysis.

2. Feature extraction

Feature extraction is the bridge between raw data and machine learning models. This essential process converts unstructured information into a structured format that models can readily use.

It involves identifying and selecting key characteristics from the data, crucial for detecting patterns and anomalies. These features range from simple textual elements like word frequencies, syntax, and grammar, to complex attributes such as semantic meaning and contextual relevance.

Effective feature extraction enhances the performance of AI detection systems by providing models with the most informative and discriminative attributes of the data.

This step often involves natural language processing techniques to analyze and quantify textual data.

Techniques such as tokenization, stemming, lemmatization, and part-of-speech tagging are commonly used to break down text into manageable components and extract meaningful features.
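To make this concrete, here is a minimal sketch that turns raw text into a handful of numeric features using only the standard library. The chosen features and their names are assumptions for illustration; a production system would typically use an NLP library and many more features.

```python
import re
from collections import Counter


def extract_features(text: str) -> dict[str, float]:
    """Convert raw text into a small dictionary of numeric features."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    counts = Counter(words)
    return {
        # Total number of word tokens.
        "word_count": len(words),
        # Average number of words per sentence.
        "avg_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        # Vocabulary richness: distinct words divided by total words.
        "type_token_ratio": len(counts) / len(words) if words else 0.0,
        # Share of the text taken up by its single most frequent word.
        "top_word_share": counts.most_common(1)[0][1] / len(words) if words else 0.0,
    }
```

The resulting dictionary is the kind of structured input a classifier can consume in place of the raw string.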

3. Model training and testing

Creating effective AI detection systems revolves around two key stages: training and testing.

In the training phase, machine learning algorithms are exposed to extensive datasets. These datasets, containing both genuine and AI-generated content, are meticulously labeled to ensure the model learns to differentiate between the two.

The goal is to reduce error rates during training and improve classification accuracy for new, unseen data.

This phase employs several techniques:

- Cross-validation, which checks that the model performs consistently across various data subsets.

- Hyperparameter tuning, which further refines the performance of the model.
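Cross-validation can be sketched as splitting sample indices into k folds and holding each fold out in turn. The helper below is a simplified illustration with a hypothetical name; it does not shuffle the data, which a real setup usually would.

```python
def k_fold_indices(n_samples: int, k: int) -> list[tuple[list[int], list[int]]]:
    """Return (training_indices, held_out_indices) pairs for k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        held_out = indices[start:start + size]
        training = indices[:start] + indices[start + size:]
        folds.append((training, held_out))
        start += size
    return folds
```

The model is trained once per fold on the training indices and scored on the held-out ones; similar scores across folds suggest consistent performance.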

Testing follows and is crucial for evaluation. The trained model is applied to a separate held-out dataset, untouched during training, to assess its accuracy, precision, and recall.

This step highlights any weaknesses or biases, providing critical insights for refinement.
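The three metrics mentioned above can be computed directly from true and predicted labels. In this sketch, 1 is assumed to mean "AI-generated" and 0 "human-written"; the function name is illustrative.

```python
def evaluate(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Compute accuracy, precision, and recall for binary labels (1 = AI)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # AI correctly caught
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # human correctly passed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # human wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # AI missed
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }
```

Precision answers "of everything flagged, how much was really AI?", while recall answers "of all AI content, how much was caught?".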

However, testing is not a one-time task.

Rigorous testing before deployment is fundamental to ensuring reliability and robustness. After deployment in real-world scenarios, continuous monitoring and periodic retraining are necessary.

This ongoing process keeps the model effective as the nature of AI-generated content changes, helping it stay ahead of new challenges.

4. Implementation and monitoring

Implementing and monitoring AI detection systems are critical phases, ensuring these systems function effectively and adapt to new challenges.

Once an AI detection model is developed and trained, the next crucial step is integration into the operational environment.

Here, it must analyze incoming data in real-time or near-real-time. This requires deploying the model on appropriate hardware, ensuring it has access to the necessary data streams, and configuring it for optimal performance.

The goal is to achieve this without significant latency or resource drain.

Monitoring is an ongoing process, though. It involves continuously tracking the performance of the AI detection system to maintain high accuracy and efficiency.

It’s necessary to regularly review key performance indicators, such as detection rate, false positive rate, and processing speed.

Last but not least, continuous monitoring helps identify any drift in the model’s performance due to changes in the type or nature of AI-generated content. By implementing feedback loops, the detection system can be periodically retrained with new data.
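A feedback loop of this kind can be sketched as a rolling window of recent outcomes that raises a retraining signal when accuracy drops. The class name, window size, baseline, and tolerance below are all hypothetical; the sketch also assumes that ground-truth labels eventually become available, for example from human review.

```python
from collections import deque


class DriftMonitor:
    """Track recent prediction outcomes and signal when accuracy drifts down."""

    def __init__(self, window: int = 100, baseline_accuracy: float = 0.9,
                 tolerance: float = 0.05) -> None:
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance

    def record(self, predicted: bool, actual: bool) -> None:
        """Store whether the latest prediction matched the reviewed label."""
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        """Accuracy over the current window (1.0 when nothing is recorded yet)."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def needs_retraining(self) -> bool:
        """Signal drift only once the window is full, to avoid noisy alerts."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.baseline_accuracy - self.tolerance
```

When `needs_retraining()` returns `True`, the recently reviewed samples can be added to the training data for the next retraining run.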

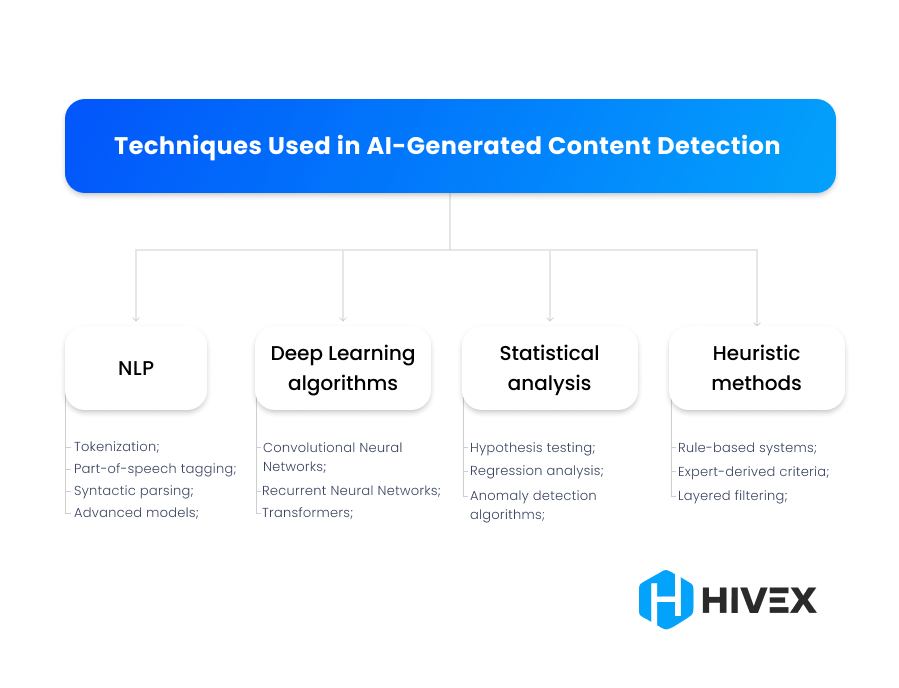

Techniques Used in AI-Generated Content Detection

The techniques employed in AI detection are crucial for accurately identifying AI-generated content and mitigating its impact. They include NLP, deep learning, statistical analysis, and heuristic methods to identify AI-generated content. Let’s explore them.

1. Natural Language Processing (NLP)

NLP is a key technique in AI detection, particularly for identifying AI-generated text. It uses diverse algorithms to process and analyze human language.

Techniques such as tokenization, part-of-speech tagging, and syntactic parsing help break down and understand text. Advanced models like BERT and GPT can identify subtle nuances in language that may indicate artificial generation, such as unusual word patterns or inconsistencies in context.

Here are some examples:

- Tokenization

This process involves breaking down a sentence into individual words or tokens.

For example, the sentence “AI-generated text can be detected” would be tokenized into [“AI-generated”, “text”, “can”, “be”, “detected”].

This helps in analyzing the structure of the text.

- Part-of-speech tagging

This involves identifying the parts of speech for each word in a sentence, such as nouns, verbs, adjectives, and so on.

For instance, in the sentence “AI-generated text can be detected,” “AI-generated” would be tagged as an adjective, “text” as a noun, “can” as a modal verb, “be” as an auxiliary verb, and “detected” as a verb (past participle).

Unusual patterns in part-of-speech tagging can indicate AI generation.

- Syntactic parsing

This technique involves analyzing the grammatical structure of a sentence.

For example, parsing the sentence “The cat sat on the mat” would help us understand the subject (cat), the verb (sat), and the prepositional phrase (on the mat).

If the structure of sentences is consistently unusual or incorrect, it may suggest AI generation.

- Advanced models (like BERT and GPT)

These models are trained on large amounts of text to represent or generate human-like language.

For example, a BERT-style model can be used to judge whether the context of a sentence makes sense, while a GPT-style model can be used to score whether the text follows human-like language patterns or contains unusual word combinations that a human is less likely to use.
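The tokenization example from the list above can be reproduced with a short regular expression. This is a minimal sketch; the pattern is an assumption chosen to keep hyphenated words together, and NLP libraries use more elaborate tokenizers.

```python
import re


def tokenize(sentence: str) -> list[str]:
    """Split a sentence into word tokens, keeping hyphenated words together."""
    return re.findall(r"[A-Za-z0-9]+(?:[-'][A-Za-z0-9]+)*", sentence)


tokens = tokenize("AI-generated text can be detected")
print(tokens)  # ['AI-generated', 'text', 'can', 'be', 'detected']
```

Part-of-speech tagging and syntactic parsing build on these tokens but need a trained language model, so they are not shown here.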

2. Deep Learning algorithms

Deep learning algorithms (a subset of machine learning) are neural networks that learn complex patterns and features from vast datasets.

Convolutional Neural Networks (CNNs) are used for image and video detection, while Recurrent Neural Networks (RNNs) and Transformers are effective for text and speech analysis.

By training on large amounts of data, deep learning models can identify subtle indicators of AI generation that may be missed by traditional methods.

Sounds complicated, right? Let us explain:

- Convolutional Neural Networks

CNNs are mainly used to analyze images and videos. For example, a CNN can detect if an image has been artificially generated by examining pixel patterns and features that might be too perfect or repetitive, which are signs of AI generation.

- Recurrent Neural Networks

RNNs are effective for analyzing sequences, such as text or speech.

For example, they can be used to detect AI-generated text by analyzing the sequence of words and identifying patterns that are too consistent or lack the variability found in human writing.

- Transformers

These models are particularly strong at understanding context and generating language.

They can be used to detect AI-generated text by analyzing the coherence and consistency of long passages. If the text has sections that seem disjointed or overly consistent in tone and style, it might be AI-generated.

3. Statistical analysis

Statistical analysis involves the application of statistical methods to detect anomalies and inconsistencies in data.

Techniques such as hypothesis testing, regression analysis, and anomaly detection algorithms can identify deviations from expected norms.

In AI detection, statistical methods uncover different irregularities in data distributions or frequency patterns that suggest artificial manipulation or generation.

- Hypothesis testing

Hypothesis testing involves making an assumption about a dataset and testing if the data supports it.

For example, you might hypothesize that a piece of text has an average sentence length that is typical of human writing.

If the text significantly differs from this average, it might be AI-generated.

- Regression analysis

This technique examines relationships between variables.

For example, you could use regression analysis to check if the complexity of sentence structures in a text matches what is expected of human writing.

Significant differences could indicate AI generation.

- Anomaly detection algorithms

These algorithms identify data points that differ significantly from the norm.

In a large dataset of human-written text, an AI-generated sample might have unusual frequency patterns of certain words or phrases, which can be detected using these algorithms.
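The sentence-length hypothesis test described above can be sketched as a one-sample z-test. The human mean and standard deviation passed in are hypothetical reference values that would have to be estimated from a real corpus, and the z-test itself is a simplifying choice for illustration.

```python
import math
from statistics import NormalDist, mean


def sentence_length_z_test(lengths: list[int], human_mean: float,
                           human_std: float) -> tuple[float, float]:
    """Test whether the mean sentence length differs from a human reference.

    Returns the z statistic and the two-sided p-value.
    """
    n = len(lengths)
    # Standard error of the mean under the reference distribution.
    standard_error = human_std / math.sqrt(n)
    z = (mean(lengths) - human_mean) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

A small p-value means the observed average would be unlikely if the text followed the human reference, which is a reason to look closer rather than proof of AI generation.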

4. Heuristic methods

Heuristic methods rely on rules and strategies derived from expert knowledge to detect AI content.

These methods include rule-based systems where predefined criteria are used to flag suspicious content.

Heuristics can be particularly useful in combination with other techniques, providing a first layer of filtering that can catch obvious cases of AI generation before applying more computation-intensive techniques.

A closer look:

- Rule-based systems

Rule-based systems use predefined rules to flag suspicious content.

For example, a rule might be that if a text contains an unusually high frequency of rare words or specific syntactic patterns, it should be flagged for further analysis.

- Expert-derived criteria

These are rules based on expert knowledge.

For instance, experts might determine that text generated by AI often includes very uniform sentence lengths or lacks sentence structure variation.

Content meeting these criteria could be flagged as suspicious.

- Layered filtering

This approach uses heuristics as a first filter before applying more complex methods.

Simple heuristic rules might flag obvious cases of AI-generated content, such as text with repetitive phrases or unnatural language, which can then be further analyzed using NLP or deep learning algorithms.
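Layered filtering can be sketched as a cheap check that runs first and an expensive model that runs only when the cheap check finds nothing. The repeated-phrase rule, its parameters, and the `expensive_model` callable are assumptions standing in for whatever heuristic and heavier model a real system would use.

```python
from collections import Counter
from typing import Callable


def has_repeated_phrase(text: str, n: int = 3, min_repeats: int = 3) -> bool:
    """Cheap heuristic: does any n-word phrase occur at least min_repeats times?"""
    words = text.lower().split()
    counts = Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    return any(count >= min_repeats for count in counts.values())


def layered_check(text: str, expensive_model: Callable[[str], float],
                  threshold: float = 0.5) -> str:
    """Run the heuristic first; call the costly model only if it finds nothing."""
    if has_repeated_phrase(text):
        return "flagged_by_heuristic"
    if expensive_model(text) >= threshold:
        return "flagged_by_model"
    return "passed"
```

Obvious cases never reach the expensive model, which saves computation when most flagged content is caught by simple rules.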

The combination of these techniques can identify AI-generated content and mitigate its impact.

Challenges in AI Detection

No doubt, AI detection is important. However, it faces many difficulties that affect its accuracy and effectiveness. These come from the fast growth of AI technology, the difficulty of telling apart human and AI-created content, and several technical, ethical, and practical issues.

Evasion techniques

One big challenge in AI detection is that creators of AI-generated content keep finding new ways to avoid detection.

They design techniques to make their content look more like human-made content or add small changes that are hard to notice.

For example, some methods slightly alter content so that it can trick AI detectors, even if humans can’t see the difference. As AI content becomes more advanced, detection systems need to keep improving to catch these new tricks.

False positives and negatives

A false positive happens when a system wrongly identifies human-made content as AI-generated, which can lead to unnecessary problems or censorship.

A false negative happens when the system misses AI-generated content, letting it pass unnoticed.

Balancing these errors is tricky because fixing one can make the other worse. It’s crucial to keep both errors low to ensure AI detection systems are reliable and trustworthy.
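The trade-off is easy to see by moving a decision threshold over the same set of detector scores. The scores and labels below are invented for illustration; 1 is assumed to mean "AI-generated".

```python
def error_counts(scores: list[float], labels: list[int],
                 threshold: float) -> tuple[int, int]:
    """Return (false_positives, false_negatives) at a given threshold."""
    pairs = list(zip(scores, labels))
    # Human content (label 0) scored at or above the threshold is wrongly flagged.
    false_positives = sum(1 for s, y in pairs if s >= threshold and y == 0)
    # AI content (label 1) scored below the threshold slips through.
    false_negatives = sum(1 for s, y in pairs if s < threshold and y == 1)
    return false_positives, false_negatives


# Hypothetical detector scores and true labels.
SCORES = [0.1, 0.4, 0.6, 0.7, 0.9]
LABELS = [0, 0, 1, 0, 1]

for threshold in (0.3, 0.5, 0.8):
    print(threshold, error_counts(SCORES, LABELS, threshold))
```

On this toy data, lowering the threshold to 0.3 removes false negatives but produces two false positives, while raising it to 0.8 does the opposite.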

Scalability

With the rapid increase in online content, AI detection systems need to handle and analyze large amounts of data quickly.

This requires powerful computers and efficient algorithms that work well even with lots of data.

Also, these systems need to adapt to different languages and types of content, which makes their design and maintenance even more complex.

Ethical and privacy concerns

Collecting and analyzing data can raise privacy issues, especially when it involves personal or sensitive information.

There is also the risk that these systems could be misused for surveillance or censorship beyond their intended purpose.

AI Detectors in Content Generation

AI detectors have a big role to play in content generation, tackling issues from misinformation to content moderation. These advanced technologies ensure digital content integrity and authenticity across diverse platforms.

Let’s explore some of their popular applications:

Fighting fake news

A significant application of AI detectors lies in combating fake news. With the growing spread of misinformation, AI detectors utilize natural language processing and machine learning to scrutinize text for signs of fabrication.

They cross-reference content against reliable sources and examine information consistency. When they detect discrepancies, they flag potentially false news stories. This process helps prevent the spread of misinformation, thereby increasing public trust in the media.

Here are a few examples so you can see how it works:

1) AI detector scans a news article -> uses NLP to break down the text into smaller parts -> checks these parts against a database of verified news sources -> looks for inconsistencies or unusual patterns -> flags the article for human review if suspicious elements are found.

2) A social media post is uploaded -> AI detector scans the post for content -> compares information with trusted news sources and fact-checking websites -> checks tone and style for signs of propaganda or sensationalism -> flags the post if potentially fake, may warn users or limit reach until verified.

Spotting plagiarism

AI detectors are also essential in the academic and publishing fields for identifying plagiarism. They compare submissions against an expansive database of existing literature, spotting similarities and potential theft.

Advanced algorithms go further, detecting paraphrasing and subtle rewording, ensuring intellectual property is safeguarded, and original authors are duly credited.

Let’s imagine a situation:

A student submits a paper to an online plagiarism checker -> AI detector scans text and splits it into sections -> compares sections to a database of academic publications and online content -> highlights sentences or paragraphs that closely match other works -> generates a report showing potential plagiarism with links to original sources.
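The comparison step in this flow can be sketched with word shingles and Jaccard similarity. This is one simple, well-known overlap measure used here as an assumption; the article does not say which algorithm plagiarism checkers actually use, and the function names are illustrative.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping n-word sequences in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(text_a: str, text_b: str, n: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    a, b = shingles(text_a, n), shingles(text_b, n)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)
```

A checker would compute this score between each submitted section and candidate sources, then highlight the sections whose score exceeds a chosen cutoff.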

Monitoring social media

Social media platforms utilize AI detectors to monitor and manage user-generated content.

These systems monitor the vast ocean of user-generated content – billions of posts, comments, and messages each day.

By analyzing text, images, and videos, they identify and weed out harmful content like hate speech, harassment, and spam (made mainly by fake accounts).

They enforce community standards, fostering safer digital spaces and also help uncover coordinated misinformation campaigns.

Here are some great examples to illustrate the process:

Hate speech

Users post comments and messages -> AI detector scans new posts in real-time -> uses NLP to identify words and phrases associated with hate speech -> analyzes context to determine if language is harmful or offensive -> removes the post and warns the user or escalates for human review if hate speech is detected.

Spam bots

The platform monitors user activity patterns -> AI detector looks for signs of spam behavior like repeated or rapid posting -> compares patterns against known spam bot activities -> temporarily restricts account and flags for investigation if behavior matches -> platform decides on further action, such as banning the account.
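The "rapid posting" signal in this flow can be sketched as a sliding-window count over post timestamps. The limit of five posts per 60 seconds is a hypothetical value; real platforms tune such limits and combine them with other signals.

```python
def is_rapid_poster(timestamps: list[float], max_posts: int = 5,
                    window_seconds: float = 60.0) -> bool:
    """Return True if more than max_posts fall within any time window."""
    times = sorted(timestamps)
    start = 0
    for end, current in enumerate(times):
        # Slide the window start forward until it spans at most window_seconds.
        while current - times[start] > window_seconds:
            start += 1
        if end - start + 1 > max_posts:
            return True
    return False
```

An account that trips this check would then be restricted and passed on for investigation, as described in the flow above.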

Compliance and content moderation

Industries like finance, healthcare, and advertising also rely on AI detectors to ensure regulatory compliance.

In advertising, these detectors validate that promotional content meets legal and ethical standards, curbing deceptive claims.

In healthcare, they oversee the distribution of medical information, guaranteeing its accuracy and reliability.

With AI detectors, content moderation helps platforms comply with legal mandates, protect users, and maintain data integrity.

Let’s take this case:

Advertisement is submitted for online display -> AI detector scans ad text and images for product/service claims -> checks claims against regulatory guidelines and product reviews -> looks for exaggerated or false statements -> flags ad for correction or rejection if issues are found.

How to Bypass AI Detection: Common Techniques

Several techniques are used to modify content so that it is less likely to trigger AI detectors.

But some of them only work against less advanced detectors and may hurt your SEO. (We are warning you just in case.)

1. Altering the word and sentence structure

This involves changing how words are arranged or how sentences are built, like using passive instead of active voice, or rearranging clauses in a sentence.

This way, it changes the “shape” of the sentence while keeping its meaning.

It won’t harm your SEO and works well against detectors that rely on common syntactic patterns. More advanced detectors use deep learning to understand a wide variety of sentence structures, so it is less effective against them.

2. Using synonyms and antonyms

A human touch is always a nice tactic that will increase your chances of bypassing AI detection.

When you replace certain phrases and use synonyms and parent words (or better “parent keywords”), you can avoid detection and maintain the same message.

But avoid doing it too often as the excessive usage of synonyms and antonyms may make your text look unnatural, which will trigger the detector.

Also, take into account that advanced AI systems trained on large datasets often have a very broad vocabulary and understand words with similar meanings.

3. Adding symbols and punctuation

This method consists of inserting additional symbols and punctuation marks in unexpected places, so that the standard text structure is disrupted. For example, inserting a period in the middle of a word, commas, or special characters within the text.

AI models trained on clean and well-structured text might get tricked, but advanced models are generally resistant to such simple modifications.

4. Using Unicode characters

Computers recognize characters (like letters and numbers) through specific codes. Unicode provides different codes for what can look like the same character to humans.

Using this tactic, people usually replace common characters with their Unicode equivalents to trick AI that primarily matches specific character codes. For example, a Cyrillic ‘A’ looks like an English ‘A’ but is coded differently.

Basic AI systems may treat these as completely different characters due to their codes, preventing AI from recognizing words correctly.

However, advanced systems often convert all characters to a standard form before analyzing, so this technique doesn’t work against them.

So, the effectiveness of this technique depends on the detector you are up against.
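From the detector’s side, look-alike characters can be spotted because each character carries its script in its Unicode name. The sketch below flags words that mix scripts; it is a rough heuristic with hypothetical function names, not a full confusable-character check. Note that standard Unicode normalization alone does not turn a Cyrillic “А” into a Latin “A”, so a dedicated look-alike mapping is needed for that.

```python
import unicodedata

LATIN_A = "A"          # U+0041
CYRILLIC_A = "\u0410"  # looks the same on screen, but is a different code point


def scripts_in_word(word: str) -> set[str]:
    """Collect the script names of the letters in a word."""
    scripts = set()
    for ch in word:
        if ch.isalpha():
            # Unicode names usually start with the script, e.g. "LATIN SMALL LETTER A".
            scripts.add(unicodedata.name(ch, "UNKNOWN").split()[0])
    return scripts


def has_mixed_scripts(text: str) -> bool:
    """Flag text in which a single word mixes letters from different scripts."""
    return any(len(scripts_in_word(word)) > 1 for word in text.split())
```

A word such as `CYRILLIC_A + "pple"` renders like “Apple” but is flagged by `has_mixed_scripts`, while the all-Latin spelling is not.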

5. Obfuscating text

This method involves making text intentionally vague or confusing by adding irrelevant or misleading info (as if you were trying to hide the true meaning of your message by surrounding it with lots of unrelated data).

AI detectors focus on specific patterns or keywords to understand the content, and by adding misleading or incorrect information, obfuscating text disrupts these patterns.

If an AI model cannot tell relevant info from irrelevant info, it might get confused about what the text is about. But more advanced AI models use context and can often ignore the ‘noise’ or filter out irrelevant data.

(Please never do this if you are a student writing your thesis!)

The effectiveness of these techniques depends on how complex the AI system is. Basic AI tools might be tricked by these methods, but the smarter and the newer ones, which use deep learning, large datasets, and refined normalization processes, can often see through them.

The success of each method depends less on the method itself than on the particular weaknesses of the detector it is used against.

There are also a lot of software tools that offer “text humanization”, and even though they might seem pretty decent, most of them use a very primitive trick – intentionally making grammatical errors while writing.

Still, the best and safest tactic for bypassing AI detectors is to write content manually.

FAQ

Are detectors good at identifying AI-generated text in different languages?

Many detectors are designed to work with multiple languages, so in general, yes. But take into account that their accuracy depends on the language and on the quality of the training data available for it.

Generally, AI detectors are quite effective in widely spoken languages but might struggle with less common languages due to limited data.

An AI detector should be continuously updated and trained on diverse datasets to improve its performance across different languages.

Can AI detectors be tricked by advanced AI-generated text that mimics human writing styles?

Advanced AI-generated text created by large language models can indeed mimic human-written content very closely. So, while detectors are getting better at detecting AI-generated text, sophisticated AI output can sometimes slip through.

These detector tools rely on subtle cues and patterns to identify text written by AI, but as technologies develop, the line between AI and human-generated text becomes more difficult to recognize. So, it’s crucial to keep AI text detectors updated and improved.

Can an AI detector distinguish between AI-generated text and text heavily edited by AI tools?

Generally speaking, a standard AI content detector can differentiate between fully AI-generated text and text that has been heavily edited by AI tools, though it’s becoming more challenging.

The detector primarily looks for consistent patterns typical of AI content. But when a piece of text is only partially edited by AI, it can imitate human-written content more closely, making it more difficult for the AI detector to identify.

What are some common signs that can help detect AI-generated text manually?

To manually detect AI-generated text, look for a few key signs:

- Repetitive phrases or unnatural language

- Lack of emotional depth or personal experiences

- Overly perfect grammar or identical sentence structures

- Various inconsistencies within the content

These signs can help you identify AI content, but frankly, using an AI text detector is a better option as it will significantly increase accuracy and save you a lot of time.

How do AI detectors handle multimedia content like images and videos compared to text?

The best part is that AI detectors can analyze more than just text. They can also handle multimedia content like pictures and videos.

The difference is that detecting AI-generated text focuses on linguistic patterns, while detecting AI-generated images and videos requires analyzing visual patterns and anomalies.

These detectors identify manipulated or fake multimedia content by comparing it against known original content patterns. Just like with text, continuous updates and diverse training data are essential here.
